Here is another installment from my review of the June 2012 New York State Math Regents exams.

I tend to be rather critical in my evaluation of these exams, pointing out poorly constructed, poorly phrased, and mathematically erroneous questions. However, there have been some minor improvements of late.

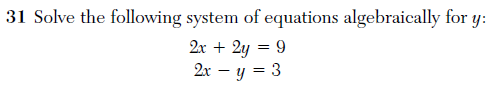

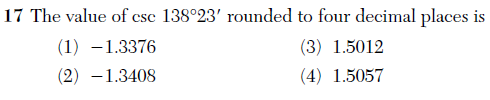

First, the wording of questions seems to have improved slightly in general. To me, questions on the June 2012 exams were more direct, specific, and clear than in the recent past.

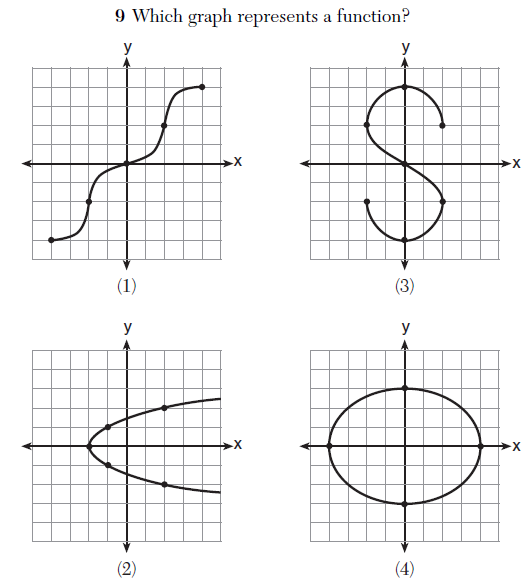

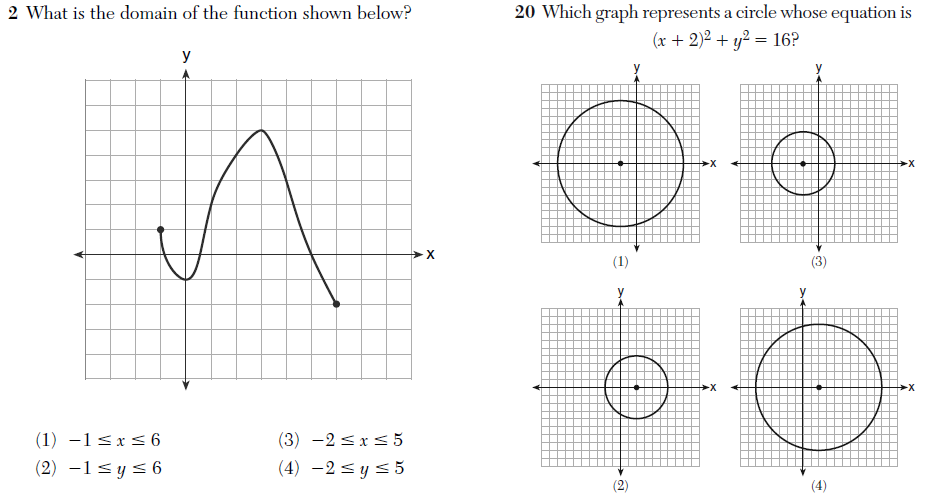

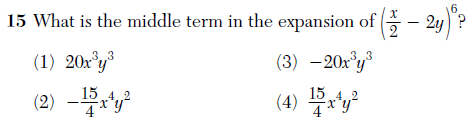

There were also some specific mathematical improvements. For example, although graphs were often unscaled, they seemed generally more precise, avoiding issues like this asymptote error.

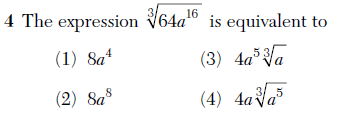

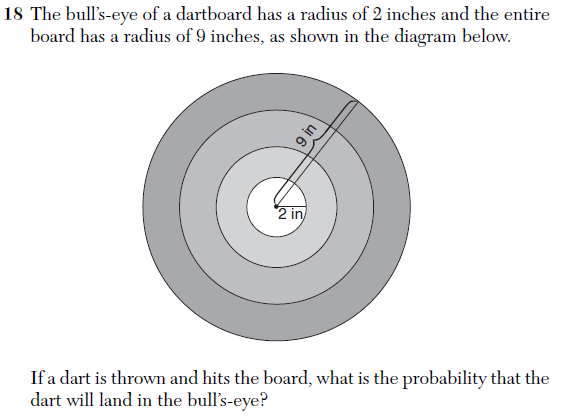

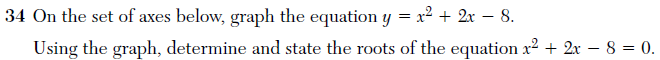

There were considerably fewer instances of non-equivalent expressions being considered equivalent. The problem below avoids the domain issues that plagued recent exams.

Perhaps it’s just luck, but we’ll give the exam writers the benefit of the doubt for now.

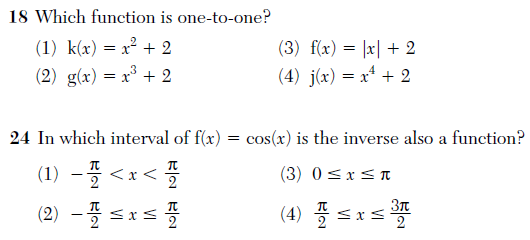

And the Algebra 2 / Trig exam definitely demonstrated a more sophisticated understanding of 1-1 and inverse functions, which is good to see in the wake of this absolute embarrassment from last year.

Perhaps someone has been reading my recaps?

Let’s hope we see continued improvement in the clarity and precision of these exams. If these exams are going to play such an important role in today’s educational environment, it seems of utmost importance that they be accurate and well-constructed.