Super Bowl Predictions

ESPN recently published a list of “expert” predictions for Super Bowl 50. Seventy writers, analysts, and pundits predicted the final score of the upcoming game between the Carolina Panthers and the Denver Broncos. I thought it might be fun to crowdsource a single prediction from this group of experts.

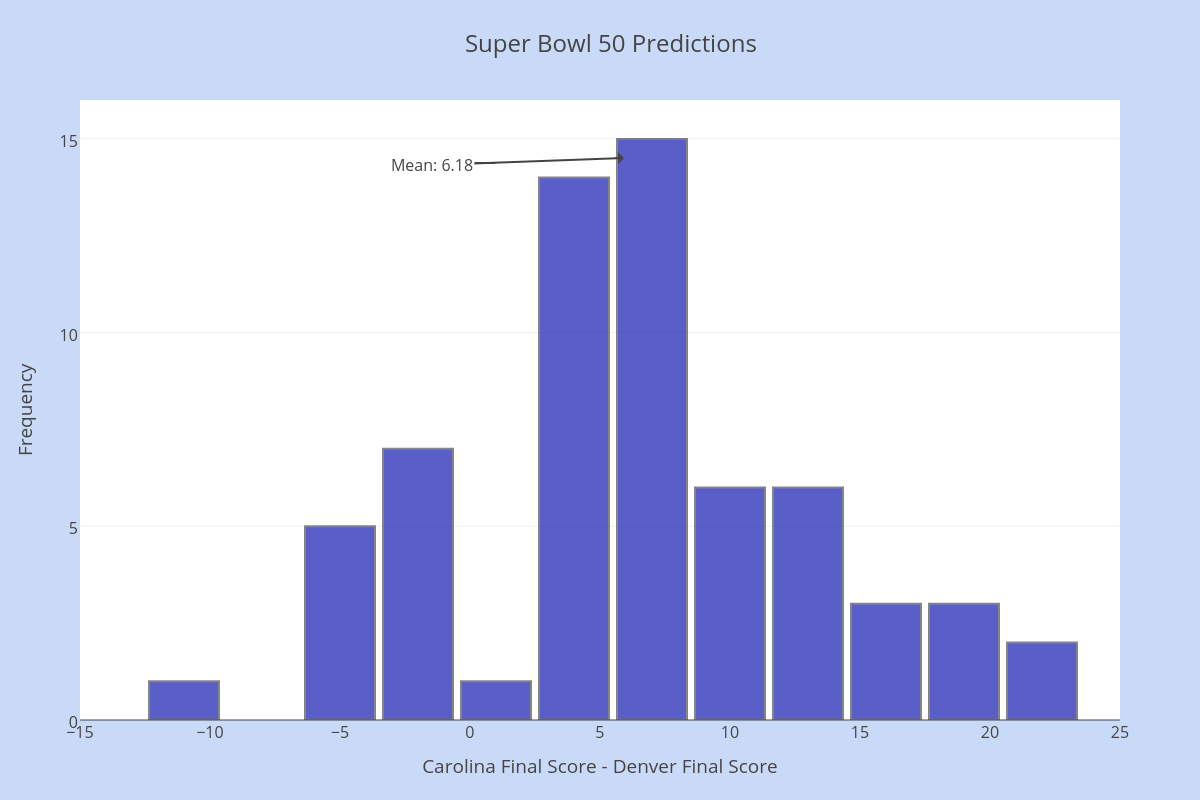

Below is a histogram showing the predicted difference between Carolina’s score and Denver’s score. The distribution looks fairly normal (symmetric and unimodal).

The average difference is 6.15 points, with a standard deviation of 7.1 points. Since we are looking at Carolina’s score – Denver’s score, these predictors clearly favor Carolina to win, by nearly a touchdown.

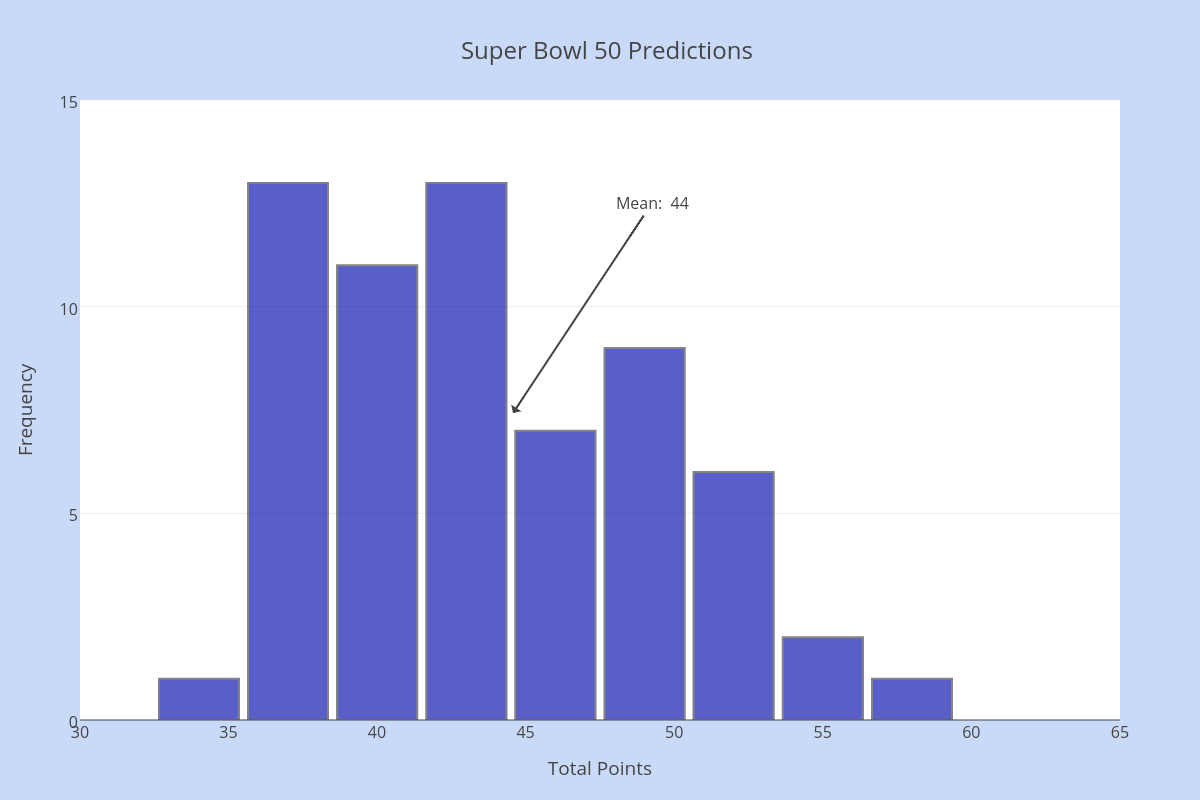

This second histogram shows the predicted total points scored in the game. The average is 44 points, with a standard deviation of 5.7 points.

Combining the two statistics (an average total of about 44 points and an average Carolina margin of about 6 points), let’s say that the group of ESPN experts predicts a final score of Carolina 25 – Denver 19. We’ll find out just how good their predictions are tomorrow!
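For anyone who wants to check the arithmetic, here is a minimal Python sketch of how the two averages combine into a single score. It assumes the average total and the average margin can be treated as the sum and the difference of the two team scores; the function name `combine_predictions` is just an illustrative choice, not anything from ESPN.

```python
def combine_predictions(total, margin):
    """Solve carolina + denver = total and carolina - denver = margin."""
    carolina = (total + margin) / 2
    denver = (total - margin) / 2
    return carolina, denver


# Averages reported above: 44 total points, Carolina favored by 6.15.
carolina, denver = combine_predictions(total=44, margin=6.15)

# Round to whole points, since football scores are integers.
print(f"Carolina {round(carolina)} - Denver {round(denver)}")
```

The unrounded values come out to roughly 25.1 and 18.9, which is where the 25 and 19 come from.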

[See the full list of ESPN expert predictions here.]

6 Comments

Amy Hogan · February 6, 2016 at 11:42 am

Love graphically seeing the point difference gap at 0 points.

MrHonner · February 6, 2016 at 6:33 pm

Yes, a great conversation-starter!

Sendhil Revuluri · February 6, 2016 at 5:41 pm

But the thing is that 25 is a very unlikely final team score, and 19 is rather unlikely as well. (See, for instance, http://knowmore.washingtonpost.com/2014/12/03/the-most-common-score-in-the-nfl-is-two-touchdowns-and-a-field-goal/.)

So I don’t think an expert would be picking 25–19 as the most likely final score, though perhaps one would if the “cost function” were built to reward getting “close” to the final score rather than hitting it exactly. Interesting question of statistics of joint distributions with discreteness…

MrHonner · February 6, 2016 at 6:35 pm

I think the most interesting aspect of all this is quantifying the quality of the prediction after-the-fact. As you point out, this prediction is unlikely to be exactly correct, but it may end up being closest on a metric (or metrics) we design to evaluate the picks.

Wahaj · February 16, 2016 at 5:26 pm

As a digression, I would recommend the book Superforecasting by Philip E. Tetlock and Dan Gardner. It’s interesting how many “non-experts” there are who are capable of consistently making better predictions about score/point distributions on a number of popularly forecasted topics. Historically, the forecasts of these so-called “experts” go unchecked (primarily due to their credentials), even though most of their predictions are outperformed by random walks.

MrHonner · February 16, 2016 at 6:58 pm

Recommendation noted. I’ve added it to my list, which at this rate, means it will likely be read in 2019.