I’ve been writing critically about the New York State Regents exams in mathematics for many years. Underlying all the erroneous and poorly worded questions, problematic scoring guidelines, and inconsistent grading policies is a simple fact: the process of designing, writing, editing, and administering these high-stakes exams is deeply flawed. There is a lack of expertise, supervision, and ultimately, accountability in this process. The June, 2017 Geometry exam provides a comprehensive example of these criticisms.

The New York State Education Department has now admitted that at least three mathematically erroneous questions appeared on the June, 2017 Geometry exam. It’s bad enough for a single erroneous question to make it onto a high-stakes exam taken by 100,000 students. The presence of three mathematical errors on a single test points to a serious problem in oversight.

Two of these errors were acknowledged by the NYSED a few days after the exam was given. The third took a little longer.

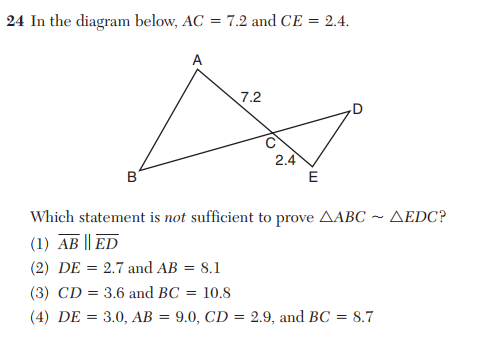

Ben Catalfo, a high school student on Long Island, noticed the error. He brought it to the attention of a math professor at SUNY Stony Brook, who verified the error and contacted the state. (You can see my explanation of the error here.) Apparently the NYSED admitted they had noticed this third error, but they refused to do anything about it.

It wasn’t until Catalfo’s Change.org campaign received national attention that the NYSED felt compelled to publicly respond. On July 20, ABC News ran a story about Catalfo and his petition. In the article, a spokesperson for the NYSED tried to explain why, even though Catalfo’s point was indisputably valid, they would neither re-score the exam nor issue any correction:

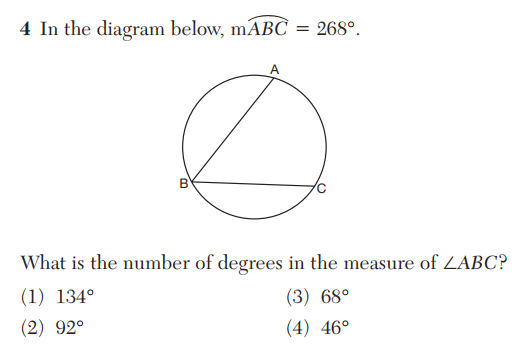

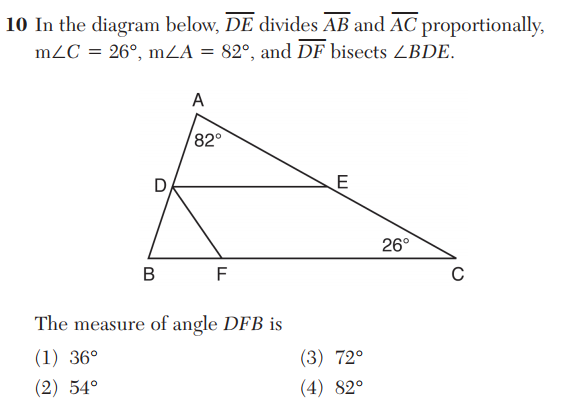

“[Mr. Catalfo] used mathematical concepts that are typically taught in more advanced high school or college courses. As you can see in the problem below, students weren’t asked to prove the theorem; rather they were asked which of the choices below did not provide enough information to solve the theorem based on the concepts included in geometry, specifically cluster G.SRT.B, which they learn over the course of the year in that class.”

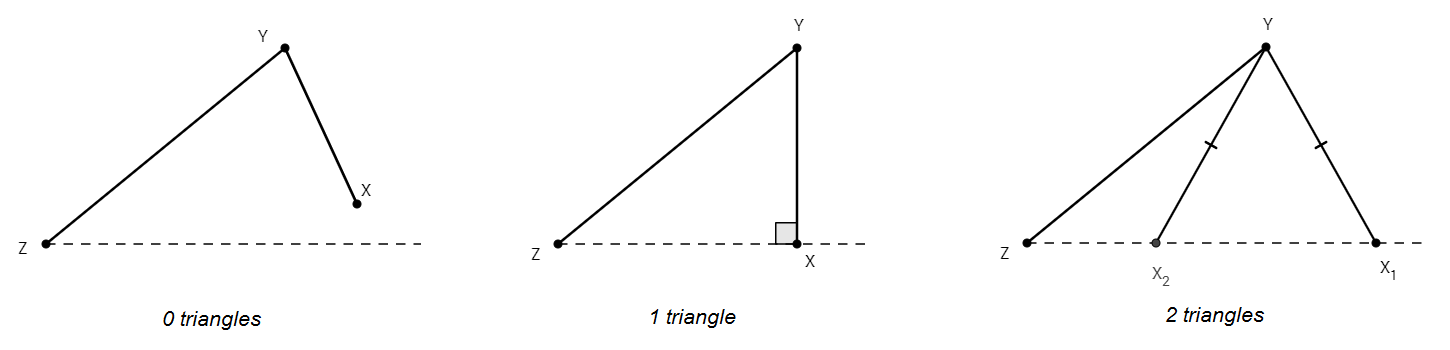

There is a lot to dislike here. First, Catalfo used the Law of Sines in his solution: far from being “advanced”, the Law of Sines is actually an optional topic in NY’s high school geometry course. Presumably, someone representing the NYSED would know that.

Second, the spokesperson suggests that the correct answer to this test question depends primarily on what was supposed to be taught in class, rather than on what is mathematically correct. In short, if students weren’t supposed to learn that something is true, then it’s ok for the test to pretend that it’s false. This is absurd.

Finally, notice how the NYSED’s spokesperson subtly tries to lay the blame for this error on teachers:

“For all of the questions on this exam, the department administered a process that included NYS geometry teachers writing and reviewing the questions.”

Don’t blame us, suggests the NYSED: it was the teachers who wrote and reviewed the questions!

The extent to which teachers are involved in this process is unclear to me. But the ultimate responsibility for producing valid, coherent, and correct assessments lies solely with the NYSED. When drafting any substantial collaborative document, errors are to be expected. Those who supervise this process and administer these exams must anticipate and address such errors. When they don’t, they are the ones who should be held accountable.

Shortly after making national news, the NYSED finally gave in. In a memo distributed on July 25, over a month after the exam had been administered, school officials were instructed to re-score the exam, awarding full credit to all students regardless of their answer.

And yet the NYSED still refused to accept responsibility for the error. The official memo read:

“As a result of a discrepancy in the wording of Question 24, this question does not have one clear and correct answer.”

More familiar nonsense. There is no “discrepancy in wording” here, nor here, nor here, nor here. This question was simply erroneous. It was an error that should have been caught in a review process, and it was an error that should have been addressed and corrected when it was first brought to the attention of those in charge.

From start to finish, we see problems plaguing this process. Mathematically erroneous questions regularly make it onto these high-stakes exams, indicating a lack of supervision and a failure in management of the test creation process. When errors occur, the state is often reluctant to address the situation. And when forced to acknowledge errors, the state blames imaginary discrepancies in wording, typos, and teachers, instead of accepting responsibility for the tests it has mandated and created.

There are good things about New York’s process. Teachers are involved. The tests and all related materials are made entirely public after administration. These things are important. But the state must devote the leadership, resources, and support necessary for creating and administering valid exams, and they must accept responsibility, and accountability, for the final product. It’s what New York’s students, teachers, and schools deserve.

Related Posts

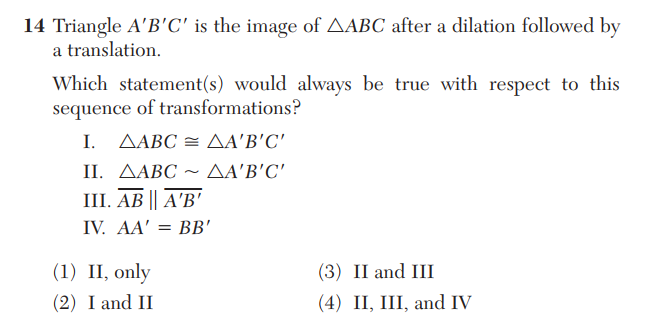

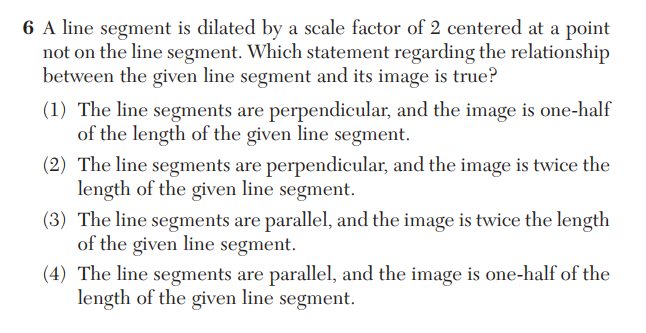

This question not only addresses the same mathematical content, it makes the mathematics the explicit focus. This would seem to be a desirable quality in a mathematical assessment item.
