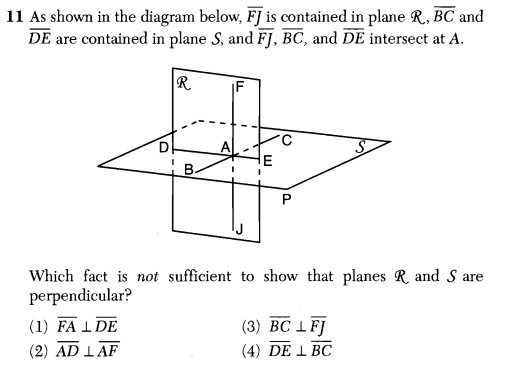

As part of my review of the August 2012 New York State Math Regents exams, I came upon this question, which rivals some of the worst I have seen on these tests. This is #11 from the Geometry exam.

This question purports to be about knowing when we can conclude that two intersecting planes are perpendicular. Sadly, the writers, editors, and publishers responsible for this question clearly do not understand the mathematics of this situation.

Each of the answer choices is a statement about two lines in the given planes being perpendicular. The problem suggests that three of these statements provide sufficient information to conclude that the given planes are perpendicular. The student’s task is thus to identify which one of the four statements does not provide sufficient information to draw that conclusion.

There is a serious and substantial flaw in the reasoning that underlies this question:

Knowing that two lines are perpendicular could never be sufficient information to conclude that two containing planes are perpendicular.

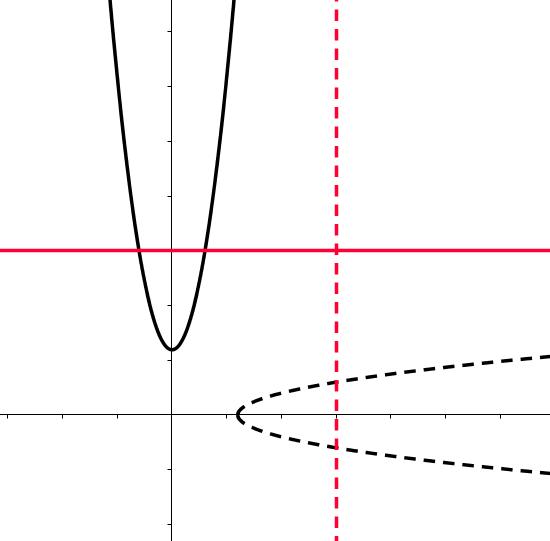

In fact, given any two intersecting planes, you can always find two perpendicular lines contained therein, regardless of the nature of their intersection. A simple demonstration of this fact can be seen here. Thus, knowing that the planes contain a pair of perpendicular lines tells you nothing at all about how the planes intersect.
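To make the claim concrete, here is a minimal sketch of one way to check it numerically. It assumes a coordinate setup of my own choosing, not anything from the exam: the first plane is z = 0, and the second is that plane rotated by an angle `theta` about the x-axis, so the x-axis is their line of intersection. The function names are hypothetical helpers written for this illustration.

```python
import math

def perpendicular_pair(theta):
    """Return direction vectors of two lines, one in each plane.

    Assumed setup: plane 1 is z = 0, and plane 2 is plane 1 rotated
    by the angle theta (in radians) about the x-axis.
    """
    # The x-axis is the line of intersection, so it lies in plane 1.
    u = (1.0, 0.0, 0.0)
    # This direction lies in plane 2 and is at right angles to the x-axis.
    v = (0.0, math.cos(theta), math.sin(theta))
    return u, v

def plane2_normal(theta):
    """Normal vector of plane 2 under the same assumed setup."""
    return (0.0, -math.sin(theta), math.cos(theta))

def dot(a, b):
    """Dot product of two 3D vectors given as tuples."""
    return sum(x * y for x, y in zip(a, b))

# Try several angles between the planes, most of them not 90 degrees.
for degrees in (10, 45, 90, 135):
    theta = math.radians(degrees)
    u, v = perpendicular_pair(theta)
    print(degrees, dot(u, v), dot(v, plane2_normal(theta)))
```

For every angle, the first dot product is zero, so the two lines are perpendicular, and the second is zero up to rounding, confirming that the second line really does lie in the second plane. The angle between the planes never enters into it.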

It’s not that this particular question has no correct answer; it’s that the suggestion that this question could have an answer demonstrates a total lack of understanding of the relevant mathematics.

How much does this matter? It’s a two-point question, it’s flawed, so we throw it out. No harm done, right?

Well, imagine a student taking this exam, whose grade for the entire year, or perhaps even their graduation, depends on the outcome of this test. Imagine the student encountering this problem, a problem that not only has no correct answer, but whose very statement is at odds with what is mathematically true. It’s not out of the realm of possibility that in struggling to understand this erroneously conceived question, a student might get rattled and lose confidence. Test anxiety is a well-known phenomenon. The effect of this problem may well extend past the two points.

Teachers are also affected. A teacher’s job may depend upon how students perform on these exams, but there isn’t any discussion about their validity. A completely erroneous question makes it through the writing, editing, and publishing process, and has an unknown effect on overall performance. After thousands of students have taken the exam, a quiet “correction” is issued, and the problem is erased from all public versions of the test.

What’s most troubling about this, to me, is that this is not an isolated incident. Year after year, problems like this appear on these exams. And when confronted with criticism, politicians, executives, and administrators dismiss these errors as “typos” or “disagreements in notation.”

These aren’t typos. These aren’t disagreements about notation. These are mathematically flawed questions that appear on exams whose express purpose is to assess the mathematical knowledge of students and, indirectly, the ability of teachers to teach that knowledge. If the writers of these exams regularly demonstrate a lack of mathematical understanding, how can we use these exams to decide who deserves to pass, who deserves to graduate, and who deserves to keep their jobs?
