There’s something especially appealing, and uncommon, about these concentric octagons. I like the isosceles triangles radiating outward, and the pairs of perpendicular lines that meet at the center.

Here is another installment in my series reviewing the NY State Regents exams in mathematics.

June 2014 saw the administration of the first official Common Core Regents exam in New York state, Algebra I (Common Core). Roughly speaking, this exam replaces the Integrated Algebra Regents exam, which is the first of the three high school level math Regents exams in New York.

One of the biggest differences between the two exams is how raw scores were converted to scaled scores. In the graph below, Integrated Algebra is represented in blue, Algebra I (Common Core) in orange. Raw scores are on the horizontal axis, and scaled scores are on the vertical axis.

The raw passing score (a scaled score of 65) is roughly the same for both exams: 30/87 for IA, and 31/86 for CC. But notice the divergence in the plots after a raw score of 30. This is because the raw “Mastery Score” (a scaled score of 85) is quite different for the two exams: 65/87 on IA, vs 75/86 on CC.
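To put those cut scores on a common footing, here is a rough sketch in Python that converts each one to a percentage of the available raw points. The four cut scores are the ones quoted above; the names `CUTS` and `percent_of_raw` are made up for this illustration and are not part of any official conversion chart.

```python
# Raw cut scores quoted above: (raw score needed, maximum raw score).
# Only these four points come from the post; the full conversion charts are not reproduced here.
CUTS = {
    "Integrated Algebra": {"passing (65)": (30, 87), "mastery (85)": (65, 87)},
    "Algebra I (Common Core)": {"passing (65)": (31, 86), "mastery (85)": (75, 86)},
}


def percent_of_raw(raw, max_raw):
    """Return raw/max_raw as a percentage, rounded to one decimal place."""
    return round(raw / max_raw * 100, 1)


for exam, cuts in CUTS.items():
    for label, (raw, max_raw) in cuts.items():
        pct = percent_of_raw(raw, max_raw)
        print(f"{exam}: {label} needs {raw}/{max_raw} = {pct}% of raw points")
```

By this measure, passing takes about 34.5% of the raw points on Integrated Algebra and 36.0% on Common Core, while mastery takes about 74.7% on Integrated Algebra and 87.2% on Common Core.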

It’s curious that the exams could be evaluated in such a way that passing requires the same raw score on both, but mastery requires a much higher score on one than the other. If the exams were of equal difficulty, this would mean the same percentage of students would pass the exam, while dramatically fewer students would achieve mastery on the Common Core exam.

This is especially curious, since the tests don’t really seem that different to me apart from some substantial changes in content emphasis. It’s hard not to see this as merely a deliberate decision to lower the mastery rate.

Furthermore, the consensus is that raw scores on the Common Core exam are substantially lower than on the Integrated Algebra exam. Based on the exams and on conversations I’ve had, I wouldn’t be surprised if scores on the Common Core exam were lower than scores on the Integrated Algebra exam by 10 raw points on average. This would lead to an even larger drop in mastery rates, as well as a drop in passing rates.

There’s an interesting opportunity here, though. I’m certain that a large number of students across New York state took both the Algebra I (Common Core) exam and the Integrated Algebra exam. This offers a unique opportunity to directly compare the tests and the conversion charts the state decided upon.

I think the Department of Education should release that data so we can see for ourselves just how different student performance was on these two exams, and judge for ourselves the consequences of these very different conversion charts.

Here is another installment in my series reviewing the NY State Regents exams in mathematics.

June 2014 saw the administration of the first official Common Core Regents exam in New York state, Algebra I (Common Core). Roughly speaking, this exam replaces the Integrated Algebra Regents exam, which is the first of the three high school level math Regents exams in New York.

The rhetoric surrounding the Common Core initiative often includes phrases like “deeper understanding”, and the standards themselves speak directly to students communicating about mathematics. These are noble goals.

So, when it comes to the Common Core exams, it’s not surprising that we see directives like “Explain how you arrived at your answer” and “Explain your answer based on the graph drawn” more often.

But including such phrases on exams won’t accomplish much if the way the student answers are assessed doesn’t change. Here’s number 28 from the Algebra I (Common Core) exam, together with its scoring rubric.

Notice that the scoring rubric gives no indication as to what constitutes a “correct explanation”. When scoring these exams, groups of readers are typically given a few samples of student work and discuss what a “correct explanation” looks like. But people grading these exams often have drastically different ideas about what constitutes justification and explanation. Given the importance Common Core seems to attach to explanation, I’m surprised that the scoring rubric takes no official position here. In fact, this rubric is essentially identical to those used for the pre-Common Core Integrated Algebra exam.

There’s a real danger in simply tacking on generic “Explain … / Describe …” directives to exam items. Consider number 34 from the Algebra I (Common Core) exam.

It’s not really clear to me what a valid response to the directive “Describe how your equation models the situation” would look like. Nor an invalid response, for that matter. So what do students make of such a directive? I suspect that, for many, it just becomes another part of the meaningless background noise of standardized testing, another place where they simply have to guess what the test-makers want to hear. And according to the rubric, the test-graders will have to guess, too.

Yes, students should be communicating about mathematics, their processes, and their ideas. But just adding “Explain how you got your answer” to a test question isn’t going to do much to help achieve that goal.

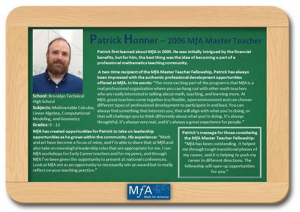

Math for America is an outstanding professional organization that focuses on training and retaining high quality math and science teachers. MfA is running an ongoing series of profiles on Facebook on members of their community, and I am proud to be one of the subjects.

One of the best aspects of being a part of the MfA community is the depth and variety of opportunities for real professional growth. Here’s a quote from my interview:

“You can always find something that interests you, that will align with what you’re doing, or that will challenge you to think differently about what you’re doing. It’s always thoughtful, it’s always real, and it’s always a great experience.”

You can see the entire profile here.

Math for America has been active in reshaping the mathematics education community in New York City for the past 12 years, and now operates in several cities nationwide. MfA also serves as a model for state Master Teacher programs, which are emerging across the country. It has had a substantial impact on my career, and on the careers of many colleagues, and I’m proud to be an active part of the community.