This is the fourth entry in a series examining the 2011 NY State Math Regents exams. The basic premise of the series is this: If the tests that students take are ill-conceived, poorly constructed, and erroneous, how can they be used to evaluate teacher and student performance?

In this series, I’ve looked at mathematically erroneous questions, ill-conceived questions, and under-represented topics. In this entry, I’ll look at a question that, when considered in its entirety, is the worst Regents question I have ever seen.

Meet number 32 from the 2011 Algebra II / Trigonometry Regents exam:

If $f(x) = x^2 - 6$, find $f^{-1}(x)$.

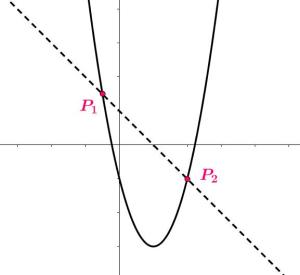

This is a fairly common kind of question in algebra: Given a function, find its inverse. The fact that this function doesn’t have an inverse is just the beginning of the story.

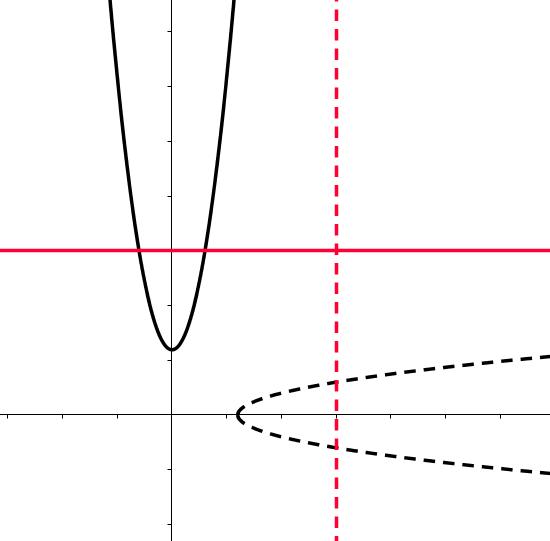

In order for a function to be invertible it must, by definition, be one-to-one. This means that each output must come from a single, unique input. The horizontal line test is a simple way to check if a function is one-to-one. In fact, this test exists primarily to determine if functions are invertible or not.
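The one-to-one check can be sketched numerically. This is only an illustration: it takes the exam's function to be $f(x) = x^2 - 6$, and `find_collision` is a hypothetical helper name, not anything from the exam or the scoring guide. It looks for two different inputs that share an output, which is exactly what a failed horizontal line test means.

```python
def f(x):
    # The exam's function, taken here to be f(x) = x^2 - 6.
    return x**2 - 6


def find_collision(func, inputs):
    """Return a pair (a, b) of distinct inputs with func(a) == func(b), or None.

    A numerical stand-in for the horizontal line test: a collision means
    some horizontal line meets the graph at two points.
    """
    seen = {}  # output value -> first input that produced it
    for x in inputs:
        y = func(x)
        if y in seen and seen[y] != x:
            return (seen[y], x)
        seen[y] = x
    return None


print(find_collision(f, range(-3, 4)))  # (-1, 1): both inputs map to -5
print(find_collision(f, range(0, 4)))   # None: no repeats once x >= 0
```

A single collision is enough to show a function is not one-to-one. Finding none on a sample of inputs proves nothing by itself; it only fails to find a counterexample.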

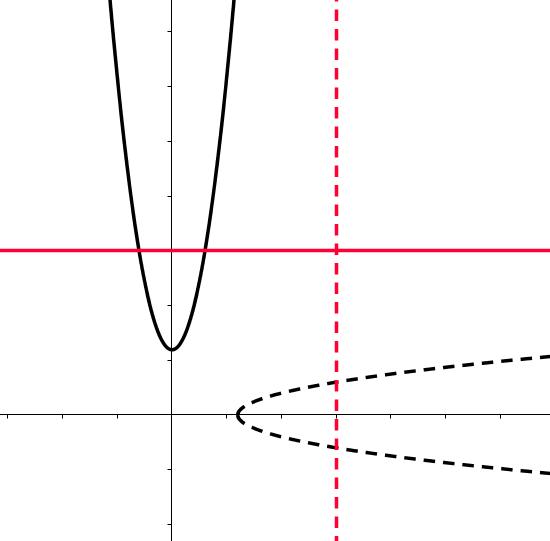

The above function $f(x)$ fails the horizontal line test and thus is not invertible. Therefore, the correct answer to this question is “This function has no inverse”. And now the trouble begins.

Let’s take a look at the official scoring guide for this two-point question.

[2] $\pm\sqrt{x + 6}$, and appropriate work is shown.

This is a common wrong answer to this question. If a student mindlessly followed the algorithm for finding the inverse (swap x and y, solve for y) without thinking about what it means for a function to have an inverse, this is the answer they would get. According to the official scoring guide, this wrong answer is the only way to receive full credit.
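For reference, here is roughly what that rote procedure looks like when applied to $f(x) = x^2 - 6$ (taking that to be the exam's function):

$$
\begin{aligned}
y &= x^2 - 6 \\
x &= y^2 - 6 && \text{(swap } x \text{ and } y\text{)} \\
y^2 &= x + 6 \\
y &= \pm\sqrt{x + 6}
\end{aligned}
$$

The last line assigns two values of $y$ to every $x > -6$. For example, $x = 3$ gives $y = \pm 3$. Whatever that expression is, it is not a function, so it cannot be an inverse function.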

It gets worse. Here’s another line from the scoring guide.

[1] Appropriate work is shown, but one conceptual error is made, such as not writing $\pm$ with the radical.

In summary, you get full credit for the wrong answer, but if you forget the worst part of that wrong answer (the $\pm$ sign), you only receive half credit! So someone actually scrutinized this problem and determined how this wrong answer could be less correct. The irony is that this conceptual error might actually produce a more sensible answer. The further we go, the less the authors seem to know about functions.
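One way to read “more sensible”: without the $\pm$, the expression $\sqrt{x + 6}$ is at least a function, and it does undo $f$ on the restricted domain $x \ge 0$. The sketch below checks this numerically. It assumes the exam's function is $f(x) = x^2 - 6$, and the names `g` and `undoes` are made up for illustration.

```python
import math


def f(x):
    # The exam's function, taken here to be f(x) = x^2 - 6.
    return x**2 - 6


def g(x):
    # The "half credit" answer: the radical without the plus-or-minus sign.
    return math.sqrt(x + 6)


def undoes(x):
    # True when g recovers x from f(x).
    return math.isclose(g(f(x)), x, abs_tol=1e-9)


print([undoes(x) for x in (0, 1, 2.5, 3)])   # [True, True, True, True]
print([undoes(x) for x in (-1, -2.5, -3)])   # [False, False, False]
```

So the radical alone inverts $f$ only after the domain is cut down to non-negative inputs, a restriction the exam question never states.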

And it gets even worse. Naturally, teachers immediately complained about this question. A long thread emerged at JD2718’s blog. Math teachers from all over New York State called in to the Regents board, which initially refused to make any changes. A good narrative of the process can also be found at JD2718’s blog.

The next day, the state gave in and issued a scoring correction: Full credit was to be awarded for the correct answer, the original incorrect answer, and two other incorrect answers. With four different answers accepted, including three that were incorrect, you might think the Regents board would have no choice but to own up to its mistake. Quite the opposite.

Here’s the opening text of the official Scoring Clarification from the Office of Assessment Policy:

Because of variations in the use of $f^{-1}(x)$ notation throughout New York State, a revised rubric for Question 32 has been provided.

There are no variations in the use of this notation, unless they wish to count incorrect usage as a variation. I understand that it would be embarrassing to admit the depth of this error, which speaks to a lack of oversight in this process, but this meaningless explanation looks even worse. This is a transparent attempt to sidestep responsibility, or accountability, in this matter.

It’s not just that an erroneous question appeared on a state exam. First, someone wrote this question without understanding its mathematical consequences. Next, someone who didn’t know how to solve the problem created a scoring rubric for it, and in doing so demonstrated even further mathematical misunderstanding. Then, all of this material made it through quality control and into the hands of tens of thousands of students in the form of a high-stakes exam. And in the end, facing a chorus of legitimate criticism and complaint, those in charge of the process offered up the lamest of excuses in an attempt to save face and eschew responsibility.

It might not seem like such a big deal. But what if your graduation depended on it? Or your job? Or your school’s very existence? Then it’s a big deal. At least, it should be.
