Here is another installment in my series reviewing the NY State Regents exams in mathematics.

The January 2013 math Regents exams contained many of the issues I’ve complained about before: lack of appreciation for the subtleties of functions, asking for middle terms, non-equivalent equivalent expressions, and the like.

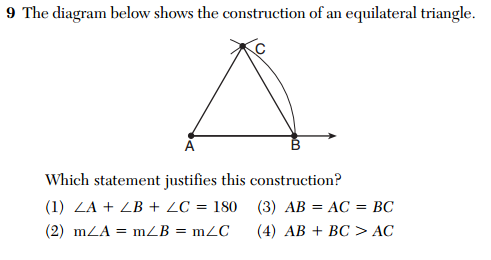

I’ve chronicled some of the larger issues I saw this January here, but there were a few irritating problems that didn’t quite fit elsewhere, like number 9 from the Geometry exam.

First of all, I don’t really understand why we bother writing multiple choice questions about constructions instead of just having students perform constructions. Setting that issue aside, this question is totally pointless.

The triangle is equilateral. Regardless of how it was constructed, the fact that AB = AC = BC will always justify its equilateralness. Under no circumstances could the fact that AB = AC = BC fail to justify that a triangle is equilateral. The construction aspect of this problem is entirely irrelevant.

Next, I really emphasize precise use of language in math class. In my opinion, in order to think clearly about mathematical ideas, you need to communicate clearly and unambiguously about them. The wording of number 32 from the Algebra 2 / Trig exam bothers me.

What does “the answer” mean in the phrase “express the answer in simplest radical form”? Presumably it means “the two solutions to the equation”, but “answer” is singular. And if it means “the set of solutions”, well, you can’t put a set in simplest radical form.

Are we trying to trick the students into thinking there’s only one solution? Or is this just a lazy use of the word “answer”, like the way students lazily use the word “solve” to mean dozens of different things? I understand that this is nit-picking, but this is a particular pet peeve of mine.

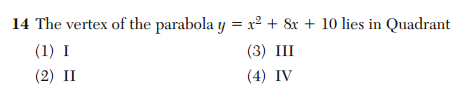

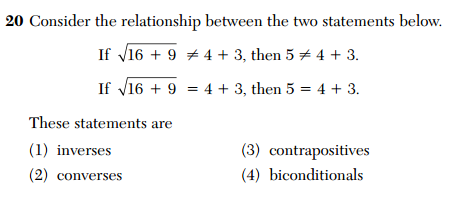

Lastly, number 20 from the Geometry exam is simply absurd. Just looking at it makes me uncomfortable.

I’m sure we can find a better way to test knowledge of logical relationships than by promoting common mathematical errors!