Regents Recap — January 2013: Question Design

Here is another installment in my series reviewing the NY State Regents exams in mathematics.

One consequence of scrutinizing standardized tests is a heightened sense of the role question design plays in constructing assessments.

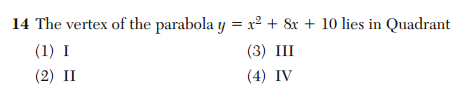

Consider number 14 from the Integrated Algebra exam.

To answer this question correctly, the student has to do two things: locate the vertex of a parabola, and correctly name a quadrant.

Suppose a student gets this question wrong. Is it because they couldn’t find the vertex of a parabola, or because they couldn’t correctly name the quadrant? We don’t know.

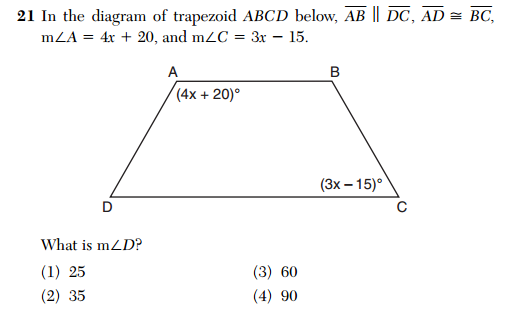

Similarly, consider number 21 from the Geometry exam.

This is a textbook geometry problem, and there’s nothing inherently wrong with it. But if a student gets it wrong, we don’t know if they got it wrong because they didn’t understand the geometry of the situation, or because they couldn’t execute the necessary algebra.

Using student data to inform instruction is a big deal nowadays, and collecting student data is one of the justifications for the increasing emphasis on standardized exams. But is the data we’re collecting meaningful?

If a student gets the wrong answer, all we know is that they got the wrong answer. We don’t know why; we don’t know what misconceptions need to be corrected. In order to find out, we need to look at student work and intervene based on what we see.

And what if a student gets the right answer? Well, there is a non-zero chance they got it by guessing. In fact, with four answer choices, on average one out of every four students who have no idea what the answer is will guess it correctly. So a right answer doesn't reliably mean that the student knows how to solve the problem, anyway.
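Here is a quick sketch of the arithmetic behind that guessing claim. It assumes each question has four answer choices with exactly one correct, and that a student with no idea picks uniformly at random. The function names are hypothetical, and the 50% "knows the answer" figure in the last line is an illustrative assumption, not Regents data.

```python
import random
from fractions import Fraction


def chance_of_lucky_guess(num_choices: int = 4) -> Fraction:
    """Probability that a blind guess is right when exactly one of
    num_choices options is correct and each option is equally likely."""
    return Fraction(1, num_choices)


def simulate_blind_guessers(num_students: int, num_choices: int = 4, seed: int = 0) -> float:
    """Fraction of students who answer correctly when every one of them guesses at random."""
    rng = random.Random(seed)
    correct_choice = 0  # which option is the right one doesn't matter for uniform guessing
    hits = sum(1 for _ in range(num_students) if rng.randrange(num_choices) == correct_choice)
    return hits / num_students


def share_of_correct_answers_from_guessing(share_who_know: Fraction, num_choices: int = 4) -> Fraction:
    """Among students who mark the right answer, the fraction who were only guessing.

    Hypothetical model: a student either knows the answer and gets it right,
    or has no idea and guesses uniformly at random.
    """
    guessers = 1 - share_who_know
    lucky = guessers * chance_of_lucky_guess(num_choices)
    return lucky / (share_who_know + lucky)


print(chance_of_lucky_guess())  # 1/4
print(round(simulate_blind_guessers(100_000), 2))  # close to 0.25
print(share_of_correct_answers_from_guessing(Fraction(1, 2)))  # 1/5
```

Under these assumptions, if half the students know the answer, one in five of the correct answers still comes from a lucky guess, which is why a right answer on its own says less than it seems to.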

So what then, exactly, is the purpose of these multiple choice questions?

2 Comments

Marshall Thompson · March 4, 2013 at 9:22 am

In their defense, I doubt they would consider this test formative. Correct me if I’m wrong, but they probably only care whether or not the student knows the answer, for the main purpose of calling out schools as being underperforming.

That’s how it is in my state, anyway.

MrHonner · March 4, 2013 at 11:38 am

That’s a fair point when it comes to these terminal exams, Marshall. I’m not sure anything produced in the name of “data-driven instruction” would be any different, though.